The Imec research institute described machine learning accelerators using arrays of resistive and magnetic memory cells rather than neural networks to reduce cost and power. Initial results included an MRAM array that lowered power by two orders of magnitude.

It's early days for the promising work. Imec is withholding details of the chips’ architecture and their performance until later in the year when it has its patents filed. The research institute started a machine learning group just 18 months ago as part of its ongoing efforts to expand beyond its core work on silicon process technology.

The introduction of the chips was among a handful of announcements on the opening day of the annual Imec Technology Forum here. Separately, researchers announced progress on a low-power eye-tracking system and an implantable chip to provide new levels of haptic feedback for a prosthetic limb.

An Imec array using MRAM cells was 100 times more energy efficient on self-learning classification tasks, according to one benchmark. “Overall, using emerging memories is more energy efficient than the CMOS-based machine learning architectures out there,” said An Steegen, executive vice president of semiconductor technology and systems at Imec.

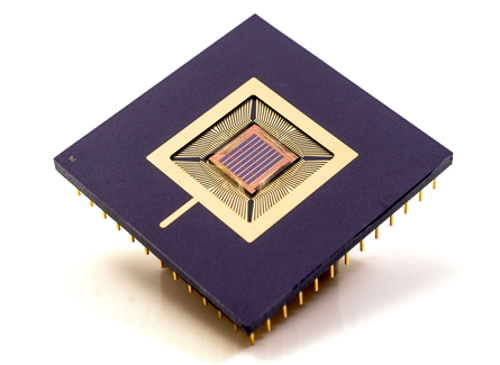

Another chip used an array of cells based on metal-oxide resistive RAMs that Imec and others call OxRAM. The 65nm chip learned to predict patterns by ingesting data on 40 classical flute pieces, then created its own music based on the patterns it learned.

The advantage of the emerging memories is that they enable a data bit to be stored in a single cell, leading to the lowest possible die size. The approach is raising hopes at Imec that it could someday be integrated into Internet of Things sensor nodes that could benefit from self-learning.

The chip needs a hierarchy of many arrays to execute useful work. Imec would not describe the size of the arrays on its chips.

“OxRAM has been used for memory storage, but our thought is to use it for a probabilistic link between two objects,” said Praveen Raghavan, who manages the program. The demo “feeds an encoding scheme in as an input and gives possible predictions, you feed in an address and read out a prediction as output data,” he said.

“The advantage is you have an extremely dense self-learning chip--by contrast IBM’s TrueNorth has a large footprint--but this is extremely high density and extremely low power and mass manufacturable,” he added.

While not a neural network, the technique bears some similarities in its application areas to Long Short-Term Memory (LSTM) networks, which predict sequences of events. The OxRAM chip would be “cheaper than an LSTM [accelerator] which can require much more data and needs a big GPU for training,” he said.
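
As a rough software analogy for the address-in, prediction-out lookup Raghavan describes, the sketch below stores weighted links between a symbol and the symbols that followed it, then reads back candidate predictions for a given address. It is an illustration only: the `LinkTable` class, its method names and the note sequence are hypothetical, and nothing here models OxRAM cells or Imec's undisclosed architecture.

```python
from collections import Counter, defaultdict


class LinkTable:
    """Toy 'probabilistic link' store: address -> weighted candidate outputs.

    Hypothetical illustration only; it does not model OxRAM cells or
    Imec's undisclosed architecture.
    """

    def __init__(self):
        # Each address holds counts of the symbols seen right after it.
        self.links = defaultdict(Counter)

    def learn(self, sequence):
        # Strengthen the link between each symbol and its successor.
        for address, successor in zip(sequence, sequence[1:]):
            self.links[address][successor] += 1

    def predict(self, address):
        # Read out candidate successors with their relative likelihoods.
        counts = self.links.get(address)
        if not counts:
            return {}
        total = sum(counts.values())
        return {symbol: n / total for symbol, n in counts.items()}


table = LinkTable()
table.learn(["C", "D", "E", "C", "D", "G"])  # made-up note sequence
prediction = table.predict("D")
```

In this toy version, an address that was always followed by the same symbol yields a single certain prediction, while one followed by different symbols yields several weighted candidates, which loosely mirrors the "possible predictions" in the demo description.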

The OxRAM approach could be good for uses such as generative adversarial networks, an emerging technique that pits neural nets against each other to speed learning.

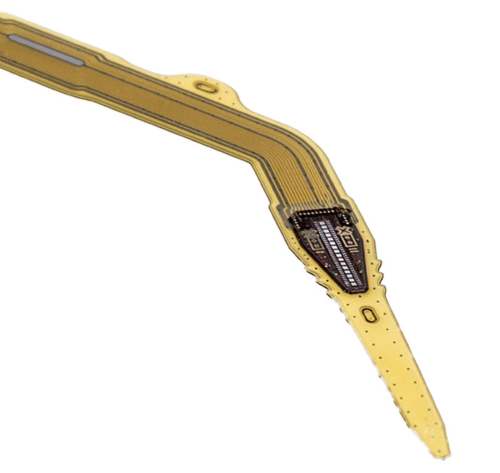

Separately, Imec announced it has developed hardware for an implantable neural chip with 128 recording and 32 stimulating electrodes, about ten times as many contacts as current devices. The chip, now in animal tests, aims to provide significantly better control and haptic feedback than is possible on today’s prosthetics.

The chip would be able to send signals between the brain and an artificial limb in hundreds of milliseconds. That’s slower than human nerve transmissions but faster than the seconds today’s prosthetics require.

However, Imec so far has prototyped only the hardware. It has not optimized its software, which, given the increased number of leads, could generate significant latency.

The project is part of a collaboration with the University of Florida, which is working in a program under the U.S. Defense Advanced Research Projects Agency.

Finally, Imec showed glasses that provide eye tracking by monitoring brain and nerve signals around the eye. So far, the system has lower accuracy than today’s camera-based eye-tracking approaches. However, it holds the promise of being significantly lower in cost and power consumption.